How Our Growth Team Operates: 3 Frameworks for $120M Pipeline

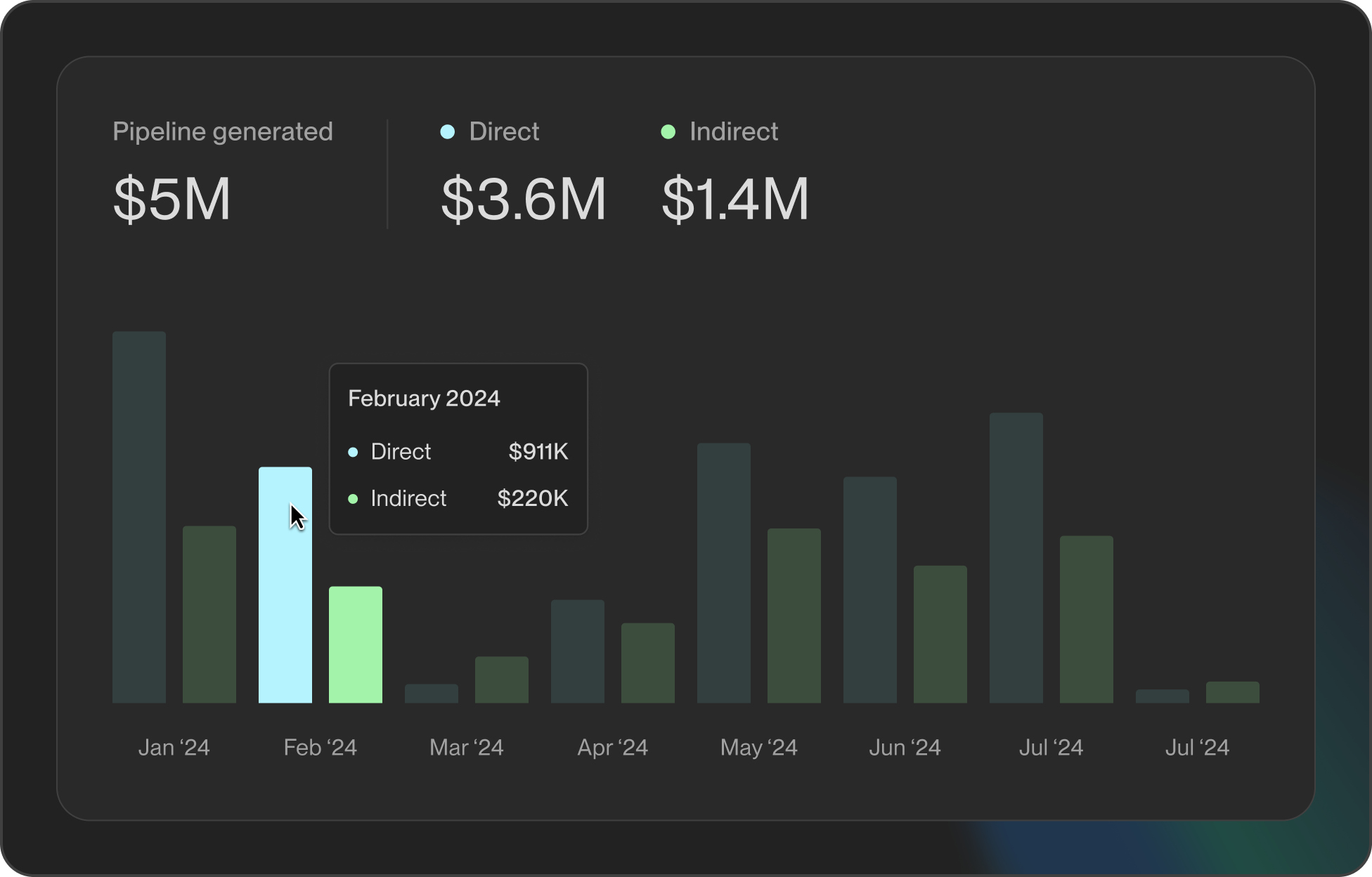

Having a growth team is one thing; running it effectively is another. In our experience, how the team operates is a major factor in its success. At Unify, we attribute a big part of the roughly $120M in pipeline we generate each year to a few simple but powerful operating frameworks. These structures keep our small growth team focused, fast, and accountable. Here are the three frameworks underpinning how we execute:

1. Weekly Sprints

We run the growth team on weekly sprints, a practice borrowed from engineering. Every Monday, we kick off a new sprint where each team member commits to 1-2 specific growth experiments or projects for the week. We set clear goals for those initiatives (e.g., “launch landing page A/B test” or “execute LinkedIn outreach campaign to segment X”). The following Monday, we review what got done and the results, then plan the next sprint.

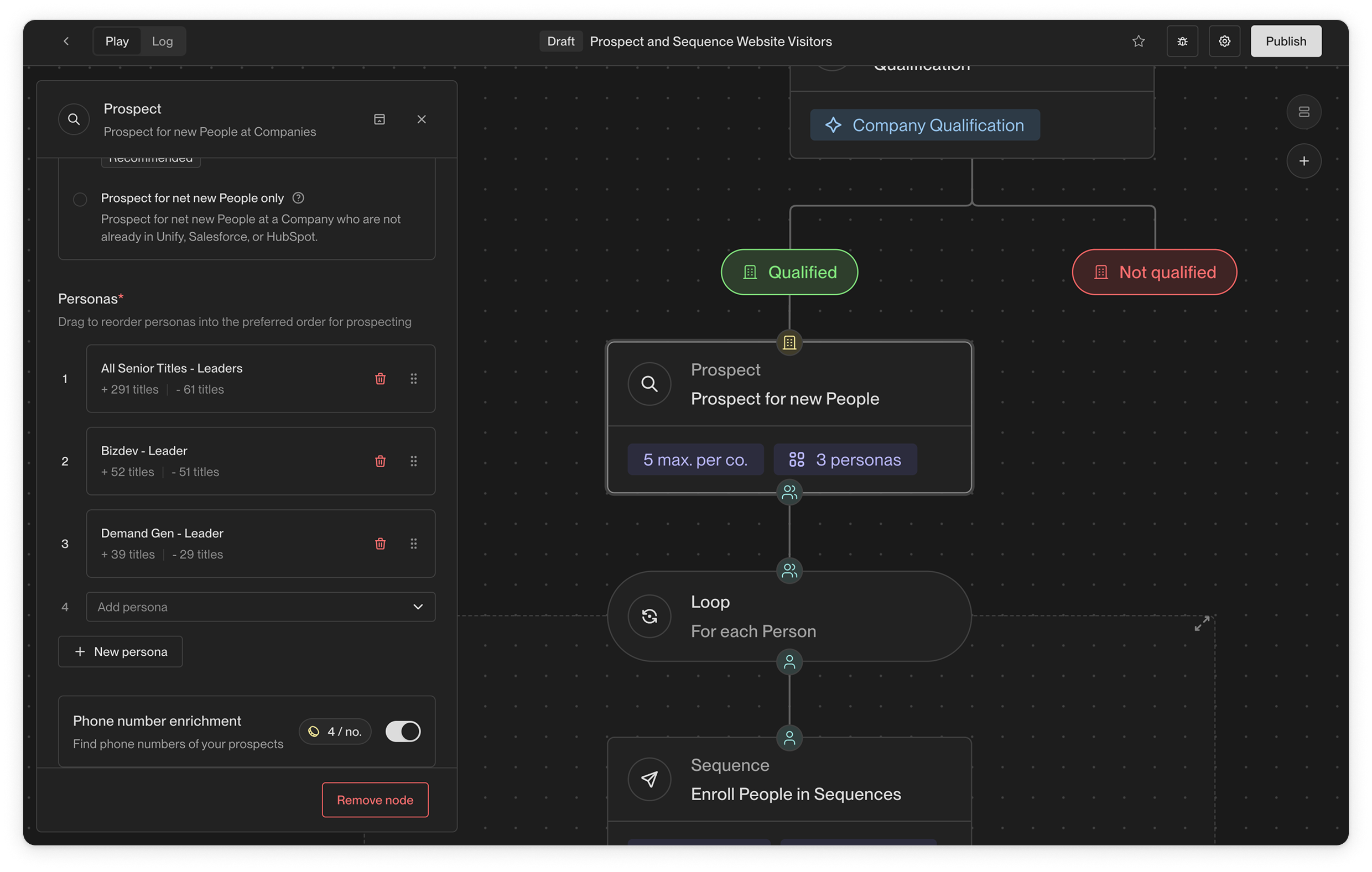

This weekly cadence creates a culture of fast iteration and accountability. By breaking our ambitious growth objectives into bite-sized weekly goals, we maintain momentum and catch issues early. It also forces prioritization – if something didn’t make this week’s sprint, it’s explicitly on the backlog. Early on, for example, when we launched our automated outbound program, we ran ~50 micro-campaigns in the first month, then doubled down on the top-performing few while continuing to test new ideas each week. Sprints create that balance of exploiting winners while still exploring new opportunities.
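To make the explore/exploit balance concrete, here is a minimal Python sketch of how a sprint's slots could be split between proven campaigns and untested ideas. It is an illustration, not Unify's actual tooling: `plan_next_sprint`, the slot counts, the campaign names, and the reply rates are all hypothetical, and it assumes each campaign is summarized by a single "higher is better" metric.

```python
def plan_next_sprint(
    results: dict[str, float],
    new_ideas: list[str],
    exploit_slots: int,
    explore_slots: int,
) -> tuple[list[str], list[str], list[str]]:
    """Split next week's capacity between proven winners and untested ideas.

    results: campaign name -> observed metric from recent sprints
             (assumed here to be a reply rate; any higher-is-better metric works).
    """
    # Exploit: rank campaigns by their observed metric and keep the top few.
    ranked = sorted(results, key=lambda name: results[name], reverse=True)
    exploit = ranked[:exploit_slots]
    # Explore: reserve the remaining slots for ideas that have not been tested yet.
    explore = new_ideas[:explore_slots]
    # Untested ideas that didn't fit stay explicitly on the backlog.
    backlog = new_ideas[explore_slots:]
    return exploit, explore, backlog


# Hypothetical campaigns and reply rates.
exploit, explore, backlog = plan_next_sprint(
    {"fintech-cfo": 0.021, "devtools-vp": 0.084, "retail-ops": 0.047},
    new_ideas=["healthcare-it", "edu-admin", "logistics-ops"],
    exploit_slots=2,
    explore_slots=1,
)
print(exploit)  # ['devtools-vp', 'retail-ops']
print(explore)  # ['healthcare-it']
print(backlog)  # ['edu-admin', 'logistics-ops']
```

Keeping a fixed number of exploration slots is one simple design choice; it guarantees new ideas keep getting tested even when the current winners look strong.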

2. Experiment Specs

Any meaningful growth initiative we tackle begins with a brief “experiment spec.” This is typically a one-pager (we actually write them in Notion) outlining the hypothesis, the proposed experiment or campaign, the metrics we’ll measure, and the expected impact versus effort. It’s akin to a mini product spec, but for growth ideas.

Requiring a spec does two things: First, it forces us to clarify our thinking before we invest time and resources. If we can’t explain why we believe an idea will move the needle, we probably shouldn’t do it. Second, it creates a record of what we did and what we expected. After the experiment, we update the spec with results and learnings. This way, we build an institutional memory of what experiments we’ve run and their outcomes.
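The specs themselves live in Notion, but their shape can be sketched as a small data structure: the fields written before the experiment starts, plus result fields filled in afterwards so the collection of specs doubles as institutional memory. This is a hypothetical sketch; the class, field, and function names are illustrative and not taken from Unify's templates.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ExperimentSpec:
    # Written before the experiment starts.
    hypothesis: str
    proposal: str         # the proposed experiment or campaign
    metric: str           # what we will measure
    expected_impact: str
    effort: str
    # Filled in afterwards, so the spec doubles as a record of what happened.
    result: Optional[str] = None
    learnings: Optional[str] = None

    def close(self, result: str, learnings: str) -> None:
        """Record the outcome once the experiment has run."""
        self.result = result
        self.learnings = learnings

    @property
    def is_closed(self) -> bool:
        return self.result is not None


def institutional_memory(specs: list[ExperimentSpec]) -> list[ExperimentSpec]:
    """Return only the specs that have recorded outcomes."""
    return [s for s in specs if s.is_closed]
```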

The specs are lightweight – sometimes just a few bullet points – but they impose discipline. For example, when we wanted to try a new outbound email sequence targeting a certain industry, we wrote a quick spec hypothesizing that companies in that vertical were more likely to respond to a specific pain point. We outlined the sequence, set a target response rate, and ran it. A week later we had data to compare to our hypothesis. This approach keeps us intentional and data-driven.
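Comparing the data to the hypothesis in that example comes down to simple arithmetic: observed response rate versus the target written in the spec. A minimal sketch follows; the function name and the numbers are hypothetical. Note that this is a plain threshold check, not a statistical significance test, so small send volumes can produce noisy rates.

```python
def check_against_target(sent: int, replies: int, target_rate: float) -> tuple[float, bool]:
    """Return the observed response rate and whether it met the spec's target."""
    if sent <= 0:
        raise ValueError("sent must be a positive number of emails")
    observed = replies / sent
    return observed, observed >= target_rate


# Hypothetical numbers: 400 emails sent, 18 replies, target response rate of 4%.
rate, met = check_against_target(sent=400, replies=18, target_rate=0.04)
print(rate, met)  # 0.045 True
```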

3. Ruthless Prioritization

Growth teams are never short on ideas; the backlog of things we could try is endless. The secret to not getting overwhelmed (or diluting your efforts) is a ruthless prioritization framework. We score or rank projects based on potential impact vs. effort (similar to ICE scoring: Impact, Confidence, Ease). The team tends to tackle the highest-impact, lowest-effort ideas first, unless there’s a strategic reason otherwise.
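For reference, a generic ICE ranking can be sketched in a few lines of Python. This is not Unify's exact scoring formula: it assumes 1-10 scores and uses the multiplicative variant (some teams average the three scores instead). Ease is the inverse of effort, so a low-effort idea gets a high ease score. The idea names and scores are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Idea:
    name: str
    impact: int      # 1-10: how much it could move the target metric
    confidence: int  # 1-10: how sure we are that it will work
    ease: int        # 1-10: higher means less effort

    def __post_init__(self) -> None:
        for field_name in ("impact", "confidence", "ease"):
            value = getattr(self, field_name)
            if not 1 <= value <= 10:
                raise ValueError(f"{field_name} must be between 1 and 10, got {value}")

    @property
    def ice(self) -> int:
        # Multiplicative ICE variant; averaging the three scores is also common.
        return self.impact * self.confidence * self.ease


def rank_backlog(ideas: list[Idea]) -> list[Idea]:
    """Highest ICE score first."""
    return sorted(ideas, key=lambda idea: idea.ice, reverse=True)


backlog = [
    Idea("pricing page A/B test", impact=8, confidence=5, ease=3),             # 120
    Idea("LinkedIn outreach to new segment", impact=5, confidence=6, ease=8),  # 240
    Idea("rebuild website from scratch", impact=9, confidence=9, ease=1),      # 81
]
print([idea.name for idea in rank_backlog(backlog)])
# ['LinkedIn outreach to new segment', 'pricing page A/B test', 'rebuild website from scratch']
```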

We actually log all growth experiment ideas and their scores in Airtable, which serves as our source of truth. Before launching anything, we estimate the upside (e.g., “if this works, it could increase our monthly qualified leads by 20%”) and the effort (“this would take 3 days of dev work”). This helps us compare dissimilar ideas on a common scale. We also define what metric we’re trying to move (pipeline dollars, conversion rate, website traffic, etc.) and how we’ll measure it. The team then picks the best bets for the next sprint.
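Picking the best bets from upside and effort estimates can be sketched as ranking by upside per day of effort and filling the sprint's capacity. This is a hypothetical illustration, not the Airtable setup: it assumes every idea's upside has already been converted to one shared unit (estimated pipeline dollars here), and all names and numbers are made up.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    metric: str             # what it is meant to move, e.g. "pipeline dollars"
    expected_upside: float  # assumed: already converted to one shared unit
    effort_days: float      # e.g. 3.0 for "3 days of dev work"

    def __post_init__(self) -> None:
        if self.effort_days <= 0:
            raise ValueError("effort_days must be positive")

    @property
    def upside_per_day(self) -> float:
        return self.expected_upside / self.effort_days


def pick_for_sprint(candidates: list[Candidate], capacity_days: float) -> list[Candidate]:
    """Greedily take the best upside-per-day bets that fit in the sprint's capacity.

    Greedy selection is a simple heuristic, not an optimal solution to the
    underlying knapsack problem.
    """
    chosen: list[Candidate] = []
    used = 0.0
    for cand in sorted(candidates, key=lambda c: c.upside_per_day, reverse=True):
        if used + cand.effort_days <= capacity_days:
            chosen.append(cand)
            used += cand.effort_days
    return chosen


ideas = [
    Candidate("intent-based outbound", "pipeline dollars", 30_000, 3),  # 10,000/day
    Candidate("website redesign", "pipeline dollars", 50_000, 10),      # 5,000/day
    Candidate("webinar follow-up", "pipeline dollars", 12_000, 2),      # 6,000/day
]
print([c.name for c in pick_for_sprint(ideas, capacity_days=5)])
# ['intent-based outbound', 'webinar follow-up']
```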

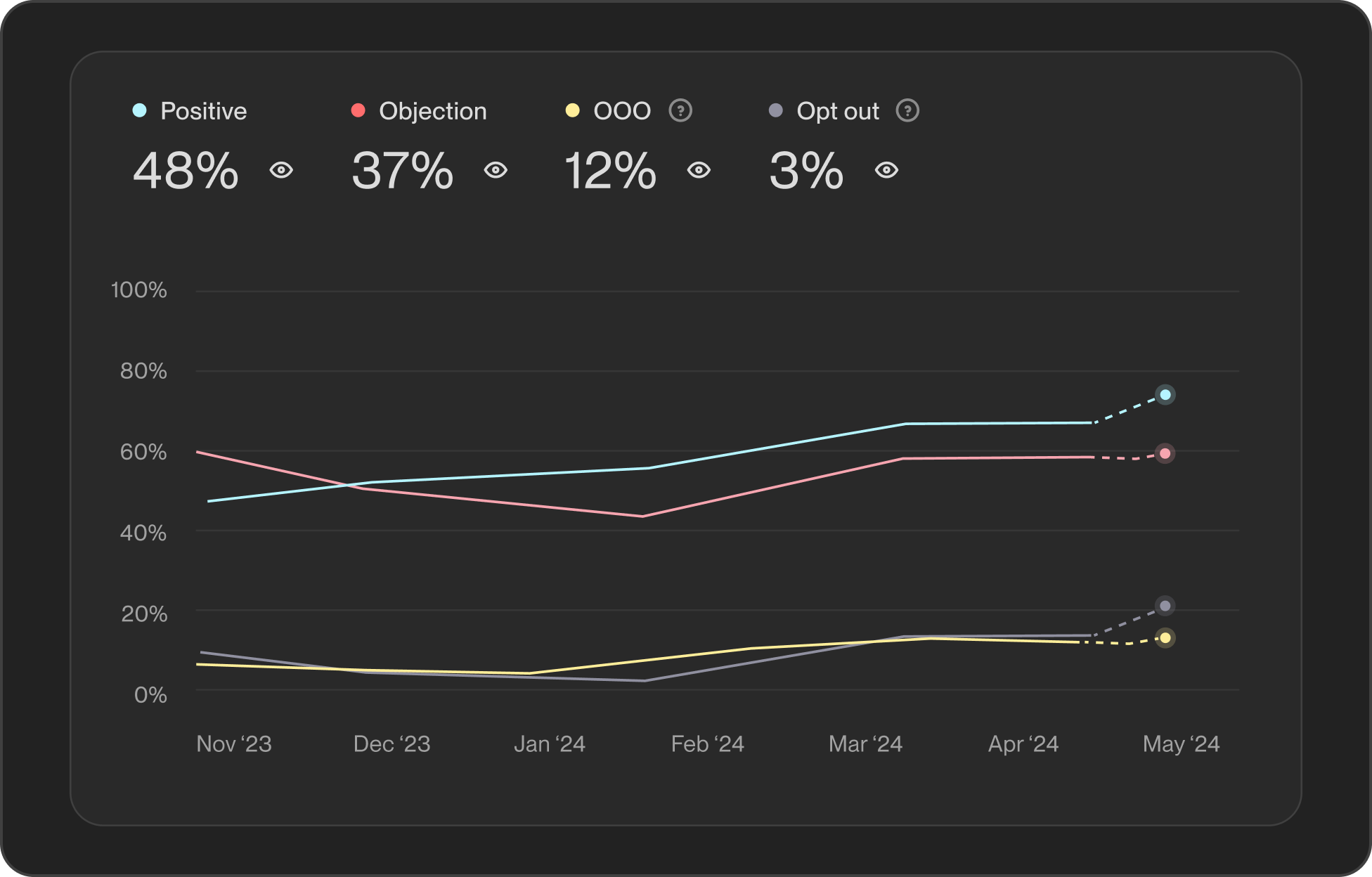

Importantly, we close the loop by tracking results. We use internal dashboards and analytics to see how an experiment performed relative to expectations. That data then informs future prioritization decisions. Over time, this process makes us better at guessing which ideas are worth it – because we have historical benchmarks.
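The post doesn't describe how historical results feed back into estimates, but one simple approach is to compute how past predictions compared with actual outcomes and scale new estimates accordingly. The sketch below is an assumption, not Unify's dashboard logic: it uses the median ratio of actual to predicted impact (the median is less sensitive to one outlier experiment than the mean), and the history values are hypothetical.

```python
from statistics import median


def calibration_factor(history: list[tuple[float, float]]) -> float:
    """Median ratio of actual to predicted impact across past experiments.

    history: (predicted, actual) pairs in the same unit. A factor below 1.0
    means past estimates were too optimistic; with no usable history, return 1.0.
    """
    ratios = [actual / predicted for predicted, actual in history if predicted > 0]
    return median(ratios) if ratios else 1.0


def calibrated(raw_estimate: float, history: list[tuple[float, float]]) -> float:
    """Scale a new estimate by how well past estimates held up."""
    return raw_estimate * calibration_factor(history)


past = [(100.0, 50.0), (200.0, 100.0), (50.0, 40.0)]  # hypothetical (predicted, actual)
print(calibration_factor(past))  # 0.5
print(calibrated(1000.0, past))  # 500.0
```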

Tying it all together: these three frameworks – Sprints, Specs, and Prioritization – work in tandem. We set a rapid pace with weekly sprints, we ensure each initiative is thought-out with a spec, and we focus on the highest-leverage actions through careful prioritization. The last ingredient is cultural: we encourage creativity and accept failure as part of the process. Not every experiment will be a win, and that’s OK. The goal is to learn quickly. By creating an environment where the team can take swings (and occasionally miss) within a solid operating structure, we’ve been able to continuously uncover growth wins.

As a result, our growth team functions like a well-oiled machine – despite being relatively small, we consistently launch experiments, scale the ones that work, and generate outsized pipeline for the business. If you’re building a growth function, consider borrowing these frameworks. They’ve been invaluable to us in turning ambitious goals into week-by-week execution that moves the needle.
